Modeling Agents with Probabilistic Programs

This book describes and implements models of rational agents for (PO)MDPs and Reinforcement Learning. One motivation is to create richer models of human planning, which capture human biases and bounded rationality.

Agents are implemented as differentiable functional programs in a probabilistic programming language based on JavaScript. Agents plan by recursively simulating their future selves or by simulating their opponents in multi-agent games. Our agents and environments run directly in the browser and are easy to modify and extend.
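As a rough illustration of what "recursively simulating their future selves" can mean, here is a minimal sketch in plain JavaScript. It is not WebPPL and is not taken from the book or its library. The line-shaped world, the reward at state 3, the function names `actionProbs` and `expectedUtility`, and the noise parameter `alpha` are all assumptions made up for this example.

```javascript
// Hypothetical toy world (an assumption for illustration): states are
// integers on a line, actions step left or right, and arriving at
// state 3 yields utility 1.
const actions = [-1, 1];
const utility = (state) => (state === 3 ? 1 : 0);
const transition = (state, action) => state + action;

// Softmax choice: P(action) is proportional to exp(alpha * expected utility).
function actionProbs(state, timeLeft, alpha) {
  const weights = actions.map((a) =>
    Math.exp(alpha * expectedUtility(state, a, timeLeft, alpha)));
  const total = weights.reduce((x, y) => x + y, 0);
  return weights.map((w) => w / total);
}

// Expected utility of taking `action` now and then behaving as the
// simulated future self (with one fewer time step) would behave.
function expectedUtility(state, action, timeLeft, alpha) {
  const next = transition(state, action);
  const immediate = utility(next);
  if (timeLeft <= 1) return immediate;
  const futureProbs = actionProbs(next, timeLeft - 1, alpha);
  return immediate + actions.reduce((sum, a, i) =>
    sum + futureProbs[i] * expectedUtility(next, a, timeLeft - 1, alpha), 0);
}

// Example: from state 0 with three steps left, the agent favors moving right.
console.log(actionProbs(0, 3, 2));
```

The two functions call each other: the agent scores an action by asking what its future self would probably do next. This naive recursion recomputes the same subproblems many times, so it is only practical for very short horizons; caching results per state and time step is one way to avoid that.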

The book assumes basic programming experience but is otherwise self-contained. It includes short introductions to “planning as inference”, MDPs, POMDPs, inverse reinforcement learning, hyperbolic discounting, myopic planning, and multi-agent planning.

For more information about this project, contact Owain Evans.

Table of contents

-

Introduction

Motivating the problem of modeling human planning and inference using rich computational models. -

Probabilistic programming in WebPPL

WebPPL is a functional subset of Javascript with automatic Bayesian inference via MCMC and gradient-based variational inference. -

Agents as probabilistic programs

One-shot decision problems, expected utility, softmax choice and Monty Hall.-

Sequential decision problems: MDPs

Markov Decision Processes, efficient planning with dynamic programming. -

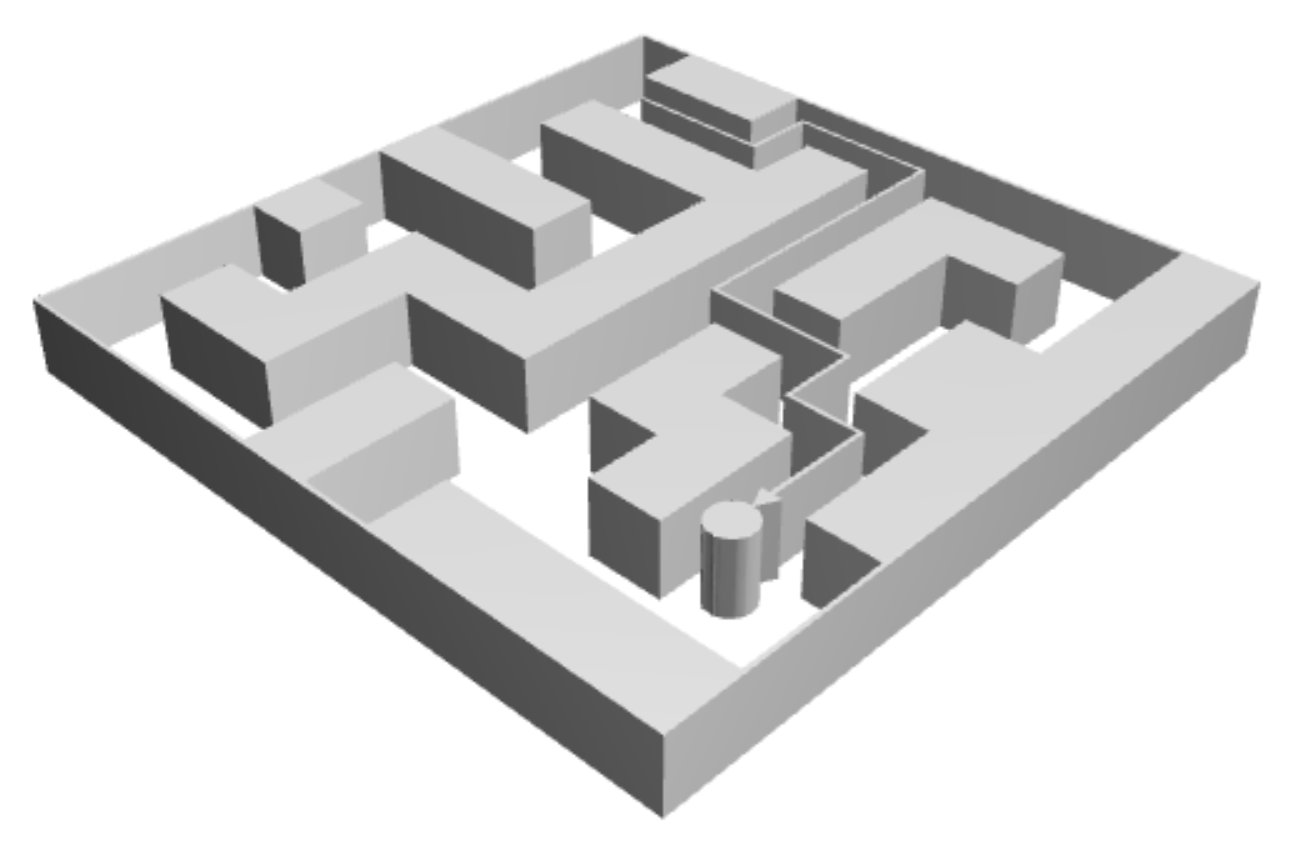

MDPs and Gridworld in WebPPL

Noisy actions (softmax), stochastic transitions, policies, Q-values. -

Environments with hidden state: POMDPs

Mathematical formalism for POMDPs, Bandit and Restaurant Choice examples. -

Reinforcement Learning to Learn MDPs

RL for Bandits, Thomson Sampling for learning MDPs.

-

-

Reasoning about agents

Overview of Inverse Reinforcement Learning. Inferring utilities and beliefs from choices in Gridworld and Bandits. -

Cognitive biases and bounded rationality

Soft-max noise, limited memory, heuristics and biases, motivation from intractability of POMDPs.-

Time inconsistency I

Exponential vs. hyperbolic discounting, Naive vs. Sophisticated planning. -

Time inconsistency II

Formal model of time-inconsistent agent, Gridworld and Procrastination examples. -

Bounded Agents– Myopia for rewards and updates

Heuristic POMDP algorithms that assume a short horizon. -

Joint inference of biases and preferences I

Assuming agent optimality leads to mistakes in inference. Procrastination and Bandit Examples. -

Joint inference of biases and preferences II

Explaining temptation and pre-commitment using softmax noise and hyperbolic discounting.

-

-

Multi-agent models

Schelling coordination games, tic-tac-toe, and a simple natural-language example. -

Quick-start guide to the webppl-agents library

Create your own MDPs and POMDPs. Create gridworlds and k-armed bandits. Use agents from the library and create your own.

Citation

Please cite this book as:

Owain Evans, Andreas Stuhlmüller, John Salvatier, and Daniel Filan (electronic). Modeling Agents with Probabilistic Programs. Retrieved from http://agentmodels.org.

@misc{agentmodels,
  title = {{Modeling Agents with Probabilistic Programs}},
  author = {Evans, Owain and Stuhlm\"{u}ller, Andreas and Salvatier, John and Filan, Daniel},
  year = {2017},
  howpublished = {\url{http://agentmodels.org}},
  note = {Accessed: }
}

Open source

- Book content

  Markdown code for the book chapters

- WebPPL

  A probabilistic programming language for the web

- WebPPL-Agents

  A library for modeling MDP and POMDP agents in WebPPL

Acknowledgments

We thank Noah Goodman for helpful discussions, all WebPPL contributors for their work, and Long Ouyang for webppl-viz. This work was supported by Future of Life Institute grant 2015-144846 and by the Future of Humanity Institute (Oxford).